Network Engineering Using Autonomous Agents Increases Cooperation in Human Groups

Abstract

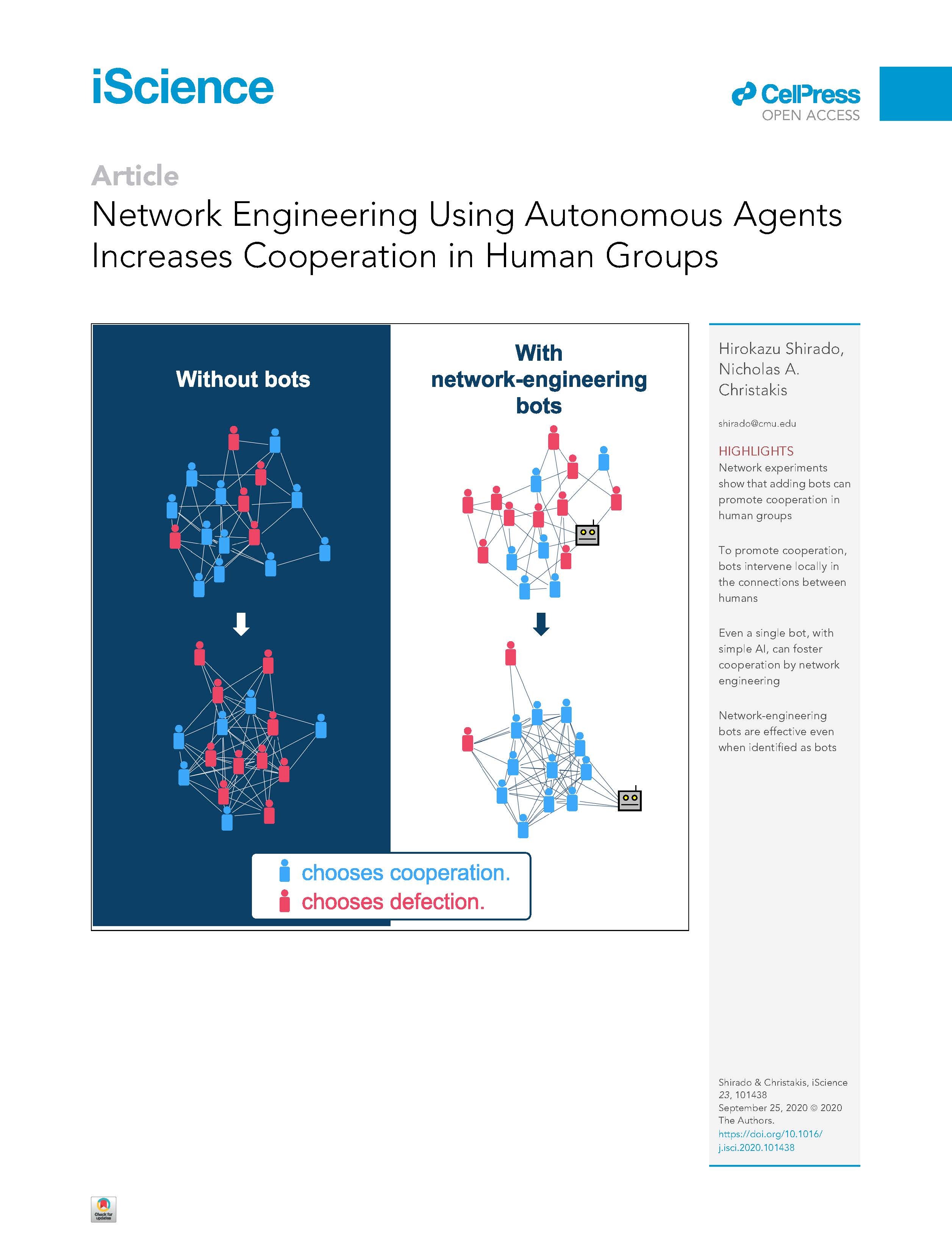

Cooperation in human groups is challenging, and various mechanisms are required to sustain it, although it nevertheless usually decays over time. Here, we perform theoretically informed experiments involving networks of humans (1,024 subjects in 64 networks) playing a public-goods game to which we sometimes added autonomous agents (bots) programmed to use only local knowledge. We show that cooperation can be not only stabilized but even promoted when the bots intervene in the partner selections made by the humans themselves, reshaping social connections locally within a larger group. Cooperation rates increased from 60.4% at baseline to 79.4% at the end. This network-intervention strategy outperformed other strategies, such as adding bots playing tit-for-tat. We also confirm that even a single bot can foster cooperation in human groups by using a mixed strategy designed to support the development of cooperative clusters. Simple artificial intelligence can increase the cooperation of groups.

Citation:

H. Shirado and N. A. Christakis, “Network Engineering Using Autonomous Agents Increases Cooperation in Human Groups,” iScience, 23(9) (Aug 2020). DOI: 10.1016/j.isci.2020.101438